Ditch the Dev Team: Chat with Your Documents in Minutes, No Coding Required

The beginner-friendly approach to conversational documents.

A very common application of Large Language Models (LLMs) is building chatbots that let you chat with documents such as PDF, DOC, text, and CSV files.

Many vendors have built wrappers around the APIs of OpenAI, Anthropic, and other LLM providers. They use tools such as Superagent, LlamaIndex, and LangChain, or Langflow and Flowise, which are no-code visual builders for LangChain. These vendors let companies deploy their own chatbots as website widgets, so potential customers can ask about their pain points, possible solutions, pricing, and more. Another typical application is customer service, where chatbots reduce the number of human agents needed to support customers.

Building your own solution, although not particularly difficult, is something most people and businesses are not prepared to do.

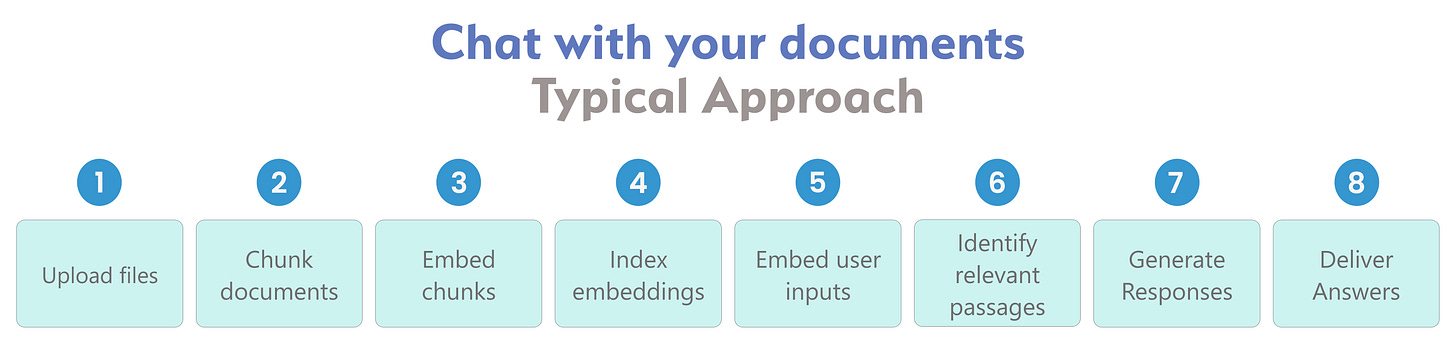

Until recently, the only way to build your own chatbot capable of answering questions about documents submitted by users was to implement the following process:

Ingest Documents. Upload files such as PDFs, Word documents, and text documents into your system.

Segment Documents. Known as chunking: break the uploaded documents into smaller sections for easier processing.

Embed Sections. Convert the document segments into numerical representations (vectors) using an embedding model.

Index Embeddings. Store the embeddings in a vector database for retrieval.

Embed User Inputs. Convert user questions into embeddings to enable semantic matching.

Identify Relevant Passages. Query the vector database to find the document sections most relevant to the user’s question.

Generate Responses. Pass the relevant passages to a language model, which formulates a response.

Deliver Answers. Return the language model’s response to the user.
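To make the steps above concrete, here is a minimal, self-contained Python sketch of the same pipeline. It is a toy built on stated assumptions and does not reflect how any particular vendor implements it. Word-count vectors stand in for a real embedding model, and a plain Python list stands in for the vector database. The generate and deliver steps stop at building the prompt you would send to an LLM. The function names (`chunk`, `embed`, `retrieve`, `build_prompt`) are our own.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Segment a document into overlapping windows of `size` words (chunking)."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    step = size - overlap
    # Stop early enough that the last window is not made only of overlap.
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts, a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(documents: list[str]) -> list[tuple[str, Counter]]:
    """Chunk and embed every document; this list plays the role of the vector database."""
    return [(piece, embed(piece)) for doc in documents for piece in chunk(doc)]


def retrieve(index: list[tuple[str, Counter]], question: str, k: int = 2) -> list[str]:
    """Embed the question and return the k most similar chunks."""
    query = embed(question)
    ranked = sorted(index, key=lambda item: cosine(query, item[1]), reverse=True)
    return [piece for piece, _ in ranked[:k]]


def build_prompt(question: str, passages: list[str]) -> str:
    """Combine the retrieved passages and the question into a prompt for an LLM."""
    context = "\n---\n".join(passages)
    return f"Answer using only the context below.\n\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    docs = [
        "Product-led growth uses the product itself as the main driver of acquisition.",
        "Pricing pages should explain the value of each tier clearly.",
    ]
    index = build_index(docs)
    question = "What drives acquisition in product-led growth?"
    # The generate and deliver steps would send this prompt to an LLM and return its reply.
    print(build_prompt(question, retrieve(index, question, k=1)))
```

Replace `embed` with a real embedding model and the list with a vector database, and you have the architecture described above. This is the plumbing that OpenAI Assistants hide from you.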

Seems daunting? It is.

You have to host your code somewhere, select and pay for a vector database, and manage short- and long-term memory to keep the conversation’s context.

Fortunately, OpenAI recently launched Assistants, which you can create in the Playground without knowing how to program. OpenAI also offers SDKs for Python and JavaScript, plus an API endpoint, for integrating Assistants into your own chatbot or app.

OpenAI Assistants take care of the chunking, embedding, vector storage, and short-term memory management for you, which makes things much easier.

But don’t worry if you can’t program. The Playground offers no ready-made, no-code way to plug your Assistant into a chatbot or app, but there is a very simple no-code solution using Flowise.

The workflow with OpenAI Assistants looks like this:

You will need:

Flowise installed somewhere in the cloud.

An OpenAI account, so you can use its APIs.

Then, you can create an Assistant directly in the OpenAI Playground or in Flowise.

To create it in the OpenAI Playground, open the Playground and select Assistants:

Then, give it a name, write the instructions, select a model, select Retrieval and add a document. You can add up to 20 documents.

If you want to handle more advanced tasks, enable Code Interpreter. With Code Interpreter enabled, your Assistant can write and run Python code in a sandbox to produce answers. This lets the chatbot do things a language model can’t reliably do on its own, such as carrying out complex calculations or generating charts from user-uploaded data.

For our example, I have:

Name: PLG Assistant (PLG stands for Product-Led Growth).

Instructions: You are an AI assistant that can analyze data and provide insights about it. In particular, related to the uploaded documents.

Model: gpt-4-1106-preview

I also uploaded a document: Product-Led Growth_ How to Build a Product That Sells Itself by Wes Bush.pdf. (I hope Wes doesn’t mind me using his book for this example :)
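If it helps to see these settings as data, the sketch below records the Playground form from this example as a Python dataclass and enforces the 20-document limit mentioned earlier. It is purely illustrative. The class, its field names, and the tool labels are hypothetical and do not correspond to OpenAI’s SDK or API.

```python
from dataclasses import dataclass, field

# Document limit per Assistant, as described in this article.
MAX_DOCUMENTS = 20


@dataclass
class AssistantSettings:
    """Hypothetical record of the Playground form fields; the names are ours, not OpenAI's."""
    name: str
    instructions: str
    model: str
    retrieval: bool = True
    code_interpreter: bool = False
    documents: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject configurations that exceed the document limit.
        if len(self.documents) > MAX_DOCUMENTS:
            raise ValueError(f"at most {MAX_DOCUMENTS} documents, got {len(self.documents)}")

    def enabled_tools(self) -> list[str]:
        """Labels (hypothetical) for the tools switched on in the form, in a fixed order."""
        tools = []
        if self.retrieval:
            tools.append("retrieval")
        if self.code_interpreter:
            tools.append("code_interpreter")
        return tools


plg = AssistantSettings(
    name="PLG Assistant",
    instructions=(
        "You are an AI assistant that can analyze data and provide insights about it. "
        "In particular, related to the uploaded documents."
    ),
    model="gpt-4-1106-preview",
    documents=["Product-Led Growth_ How to Build a Product That Sells Itself by Wes Bush.pdf"],
)
print(plg.enabled_tools())  # ['retrieval']
```

Writing the settings down this way is a handy checklist before you load the same Assistant into Flowise later on.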

Now the Playground should look like this:

Now we can enter a message to test our Assistant. Let’s start by asking: “What questions can a person ask about the document?”

Here is the response:

I also clicked on Logs to see what the system was doing:

Now let’s ask the Assistant one of the suggested questions. Let’s try:

What are the key components of building a Product-Led foundation for a business?

Great. It works as expected, and it was trivial to implement.

However, a company may want to build a chatbot for several scenarios:

- letting internal users chat with internal documents;
- letting external users chat with the company’s product documentation;
- letting users chat with documents that help with customer support.

In those cases, you want to call the Assistant you created through an API. You would call it from a chatbot platform that can be embedded in a website or accessed directly, so anyone can ask questions about the uploaded documents.

OpenAI has an SDK in Python and JavaScript, but you need to know how to program to use it. I don’t know why OpenAI didn’t add a button in the playground to access a simple API to call an Assistant and get the results as in the playground. Maybe someday.

Fortunately, Flowise, a no-code visual builder based on the LangChain library, can be used for that.

So, let’s go back to installing Flowise.

You can easily install it locally (this requires Node.js). Open a command prompt on your computer (or Git CMD) and enter: `npm install -g flowise`

Then enter `npx flowise start` to run it locally, and go to

http://localhost:3000/

However, to call the endpoint that Flowise generates from a chatbot in another app, you need to host Flowise somewhere reachable from the internet. Flowise offers many deployment options; you can read about them in the Flowise documentation.

Probably the easiest one is Railway, but you can choose the one you prefer. Railway offers $5 to try their platform. That should be enough to experiment with the use case of this article. Follow the instructions in the Flowise documentation to deploy it there.

Once you have Flowise installed, launch it. If it’s running locally, follow the instructions above. If it’s running elsewhere, go to the URL generated by your hosting service.

Then, go to the Assistants tab and you will see:

There you can add or load an Assistant.

Clicking “Add” opens the following form, where you can enter the same information you entered in the OpenAI Assistants Playground.

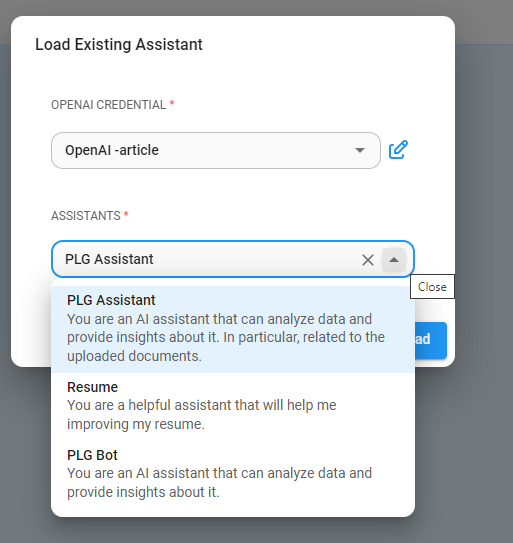

Clicking “Load” asks for your OpenAI API key and then shows the Assistants you created in the OpenAI Playground.

Once you enter your API key and give it a credential name, you can reuse that credential across your flows.

Then, you can choose your Assistant:

In this case, choose the PLG Assistant. You will get the same form as with “Add”, but everything is prefilled with the information entered in the OpenAI Playground.

Click “Add” to finish with this step.

Next, go to “Chatflows” and click on “Add New”.

In the nodes panel on the left, select “Agents” and drag and drop the OpenAI Assistant node onto the canvas:

Then, click on “Select Assistant” and choose the one you just loaded:

Save the flow and give it a name, such as PLG Assistant. It doesn’t have to have the same name as the OpenAI Assistant.

Now we can test the flow, as shown in the following image:

Up to here we just replicated the functionality that we had in the OpenAI playground. The advantage of creating this flow in Flowise is that now we can embed it in a website or use it as an API. These are the options:

Selecting Embed allows you to use it as a chatbot widget that you can add to a webpage.

Several aspects of the chatbot can be configured by selecting the option “Show Embedded Chat Config”.

The cURL option shows the endpoint and request format needed to call the flow from your own chatbot or app.

However, when using Assistants, you need to add one more parameter to the request body: “chatId”: “a unique identifier of the user”.

This keeps each user’s conversation context separate from that of other users.
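As a rough sketch of what the cURL tab translates to in code, the Python snippet below builds the POST request with the extra `chatId` field, using only the standard library. Several details are assumptions to check against your own cURL tab:

- The host and chatflow ID are placeholders.
- The `/api/v1/prediction/<chatflow-id>` path and the `text` field in the JSON reply reflect Flowise’s prediction API as we understand it.
- The optional `Authorization: Bearer` header only matters if you protected the chatflow with an API key.

Deriving the `chatId` with `uuid5` is our own design choice; any stable, unique per-user identifier should work.

```python
import json
import urllib.request
import uuid
from typing import Optional

# Hypothetical placeholders: copy the real host and chatflow ID from the Flowise cURL tab.
FLOWISE_HOST = "https://your-flowise-host.example.com"
CHATFLOW_ID = "your-chatflow-id"


def chat_id_for(user_id: str) -> str:
    """Derive a stable chatId from a user identifier (same user, same conversation)."""
    return str(uuid.uuid5(uuid.NAMESPACE_URL, f"chat-user:{user_id}"))


def build_request(question: str, user_id: str, api_key: Optional[str] = None) -> urllib.request.Request:
    """Build the POST request; the body carries the question plus the per-user chatId."""
    body = {"question": question, "chatId": chat_id_for(user_id)}
    headers = {"Content-Type": "application/json"}
    if api_key:  # assumption: only needed if the chatflow is protected with a Flowise API key
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        f"{FLOWISE_HOST}/api/v1/prediction/{CHATFLOW_ID}",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def ask(question: str, user_id: str, api_key: Optional[str] = None, timeout: float = 60) -> str:
    """Send the request and return the answer (assumes a JSON reply with a 'text' field)."""
    with urllib.request.urlopen(build_request(question, user_id, api_key), timeout=timeout) as resp:
        reply = json.load(resp)
    return reply.get("text", "")
```

A chatbot platform would call something like `ask(message, sender_id)` for every incoming message. Because the same sender always maps to the same `chatId`, each user keeps a separate conversation with the Assistant. Hashing the ID into a UUID also avoids sending raw identifiers such as phone numbers to Flowise.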

Using it with a chatbot builder is as simple as creating a flow similar to this:

This flow was created with Twnel’s visual chatbot builder. Similar flows can be created with tools such as ManyChat, UChat, Voiceflow, and many more.

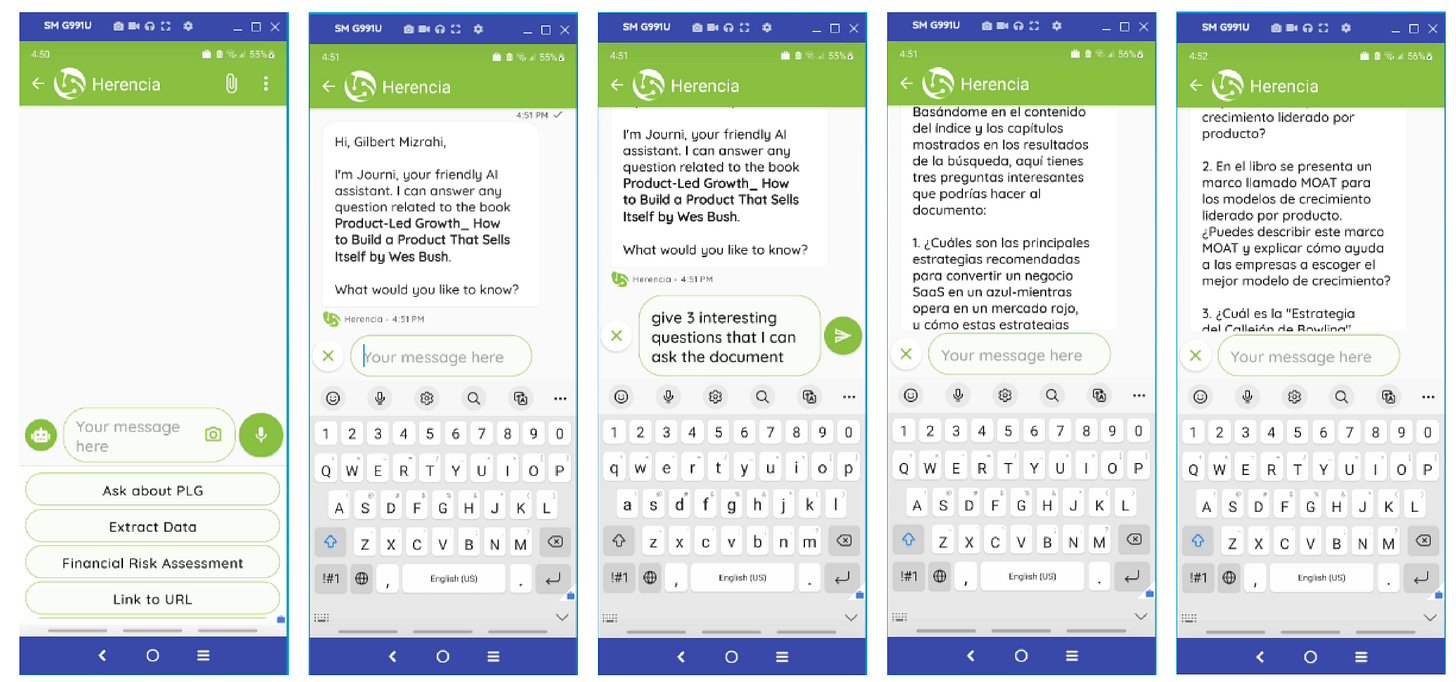

The conversation in the Twnel chatbot looks like this:

In this case, I slightly changed the Assistant’s instructions to ask it to reply in Spanish. Cool, isn’t it? You can chat with your documents in the language of your choice, as long as OpenAI’s models support it.

Advantages

Easy to implement. It doesn’t require any technical knowledge.

You don’t need to understand how a Retrieval-Augmented Generation (RAG) system works. Everything needed is abstracted for us.

You can deploy a chatbot or widget in just a few minutes.

You can enable Code Interpreter easily to perform calculations, produce graphs and much more.

Disadvantages

In my tests, it seems slower to answer than a custom-designed RAG system.

It is more expensive to run.

You don’t have control of the settings. OpenAI Assistants are a black box.

It’s limited to a maximum of 20 documents.


Conclusion

Harnessing the power of Large Language Models (LLMs) to create chatbots that interact with documents has become significantly more accessible with the advent of OpenAI Assistants.

This innovative solution eliminates the need for complex infrastructure and coding expertise, empowering individuals and organizations alike to unlock valuable insights from their documents with ease.

Whether seeking immediate answers, uncovering hidden connections, or utilizing advanced functionality like Code Interpreter, OpenAI Assistants paired with Flowise provide a streamlined and efficient path to conversational document interaction. By leveraging these tools, you can transform the way you engage with your information, opening a door to greater productivity and deeper understanding.

So, embrace the future of document exploration and start chatting with your files today!
