Vibe Coding in Action: Rebuilding a Legacy SaaS Feature in 2.5 Hours

A step-by-step story of using Gemini and Lovable to replace a slow, bloated component with a lightweight, multi-feature solution.

There's a new term making waves in the developer community: "vibe coding." It’s a workflow where you steer the development process with a clear vision, using AI assistants to translate that "vibe" into precise, functional code. It's less about writing individual lines and more about directing logic. But is it just a trend for hobby projects, or can it solve real, expensive business problems?

I decided to put it to the test.

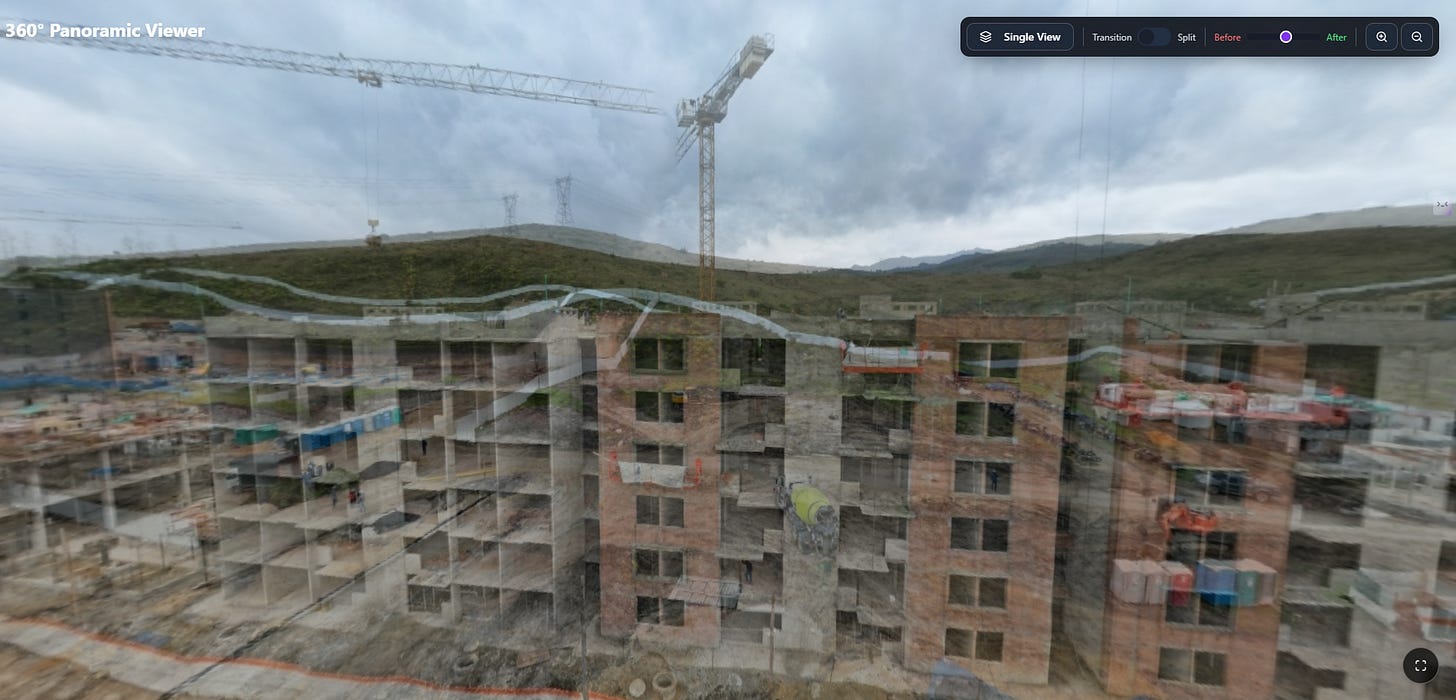

At Procorvus, where I joined as a partner in early 2025 to lead AI integrations, we had a classic case of tech debt. Our 3D panoramic viewer, a critical feature that clients use to track construction progress, was notoriously heavy, consumed excessive client-side resources, and delivered a sluggish user experience. When a team discussion concluded that fixing it would be a major undertaking, I took it on as a personal challenge. Although my day-to-day role focuses on AI, I have a developer background, and I was itching to see if this new AI-driven workflow could tackle a tangible problem.

This is the story of how vibe coding allowed me to build a superior replacement component from scratch in just 2.5 hours of focused effort.

Step 1: Setting the Vibe with Gemini 2.5 Pro

Every project needs a direction. Before touching any code, I held a strategy session with Google's Gemini 2.5 Pro to help establish the technical "vibe." My initial prompt was intentionally high-level, describing the core goal:

"I want to develop an app that can take 3D images of a construction project and compare them... The user can drag any image to rotate, zoom, etc."

Gemini’s first response was a full-scale, professional development plan, suggesting heavy-duty tools like Unity or Unreal Engine and data acquisition methods like LiDAR scanning. While impressive, this was overkill for a browser-based viewer. It did, however, help me clarify that a simpler, more elegant solution was needed.

I refined my prompt with an image of a 360° viewer with a comparison slider. Gemini immediately understood the new, leaner vibe. It pivoted, suggesting a more practical, web-based approach and recommended a key piece of technology for the job: photo-sphere-viewer.js, a lightweight and powerful library built on three.js. This suggestion, coming directly from the AI, became the technical foundation for the entire project.

Step 2: The Development Sprint, a Roadblock, and the AI Pivot

For the hands-on coding, I chose to work with Lovable, an AI development environment ideal for rapid iteration. Armed with Gemini's suggestion, I first tried to take a shortcut using a pre-built plugin, thinking it would be the fastest path. I wrote a very specific prompt:

My initial prompt to Lovable:

"I need you to generate the code for a complete, single-file web application... You must use the photo-sphere-viewer.js library (version 4) and the official SplitViewPlugin..."

This is where I hit a classic development roadblock. The plugin didn't behave the way I envisioned and kept creating frustrating issues. In a traditional workflow, this would have sent me down a deep rabbit hole of debugging someone else's library code, and the experiment, which I had squeezed into a 30-minute break, would have ended right there.

But with an AI partner, this was merely a minor detour. Instead of debugging, I changed my instructions, telling Lovable to scrap the plugin and build the entire feature from scratch using a more fundamental approach. I laid out the new logic step by step:

Forget the plugin. Use the core photo-sphere-viewer.js library as the base.

Layer the viewers. Instantiate one viewer for the "before" image and another for the "after" image, positioning the "after" viewer directly on top of the "before" one at the exact same coordinates.

Create a custom slider. Add a simple, draggable HTML element to serve as the handle.

Implement the mask. This was the key: use the slider's horizontal position to dynamically update the CSS clip-path property of the top ("after") viewer. As the user drags the handle, the script precisely updates the mask, revealing the "before" image underneath.
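
The masking step above can be sketched in a few lines of TypeScript. This is a minimal illustration, not the code Lovable generated: the function names (`sliderFraction`, `afterViewerClipPath`, `applySplit`) and the `Clippable` interface are hypothetical, and it assumes the "before" image should appear to the left of the handle and the "after" image to the right.

```typescript
// Hypothetical helpers; names and layout direction are assumptions.

// Minimal shape of an element whose clip-path we can set.
// A real HTMLElement satisfies this structurally.
interface Clippable {
  style: { clipPath: string };
}

// Convert a pointer x-coordinate into a 0..1 fraction of the container width.
function sliderFraction(
  pointerX: number,
  containerLeft: number,
  containerWidth: number
): number {
  if (containerWidth <= 0) return 0;
  const raw = (pointerX - containerLeft) / containerWidth;
  return Math.min(1, Math.max(0, raw)); // clamp so the handle stays inside
}

// Build the clip-path for the top ("after") viewer.
// inset(top right bottom left): clipping from the left hides the "after"
// layer left of the handle, so the "before" layer shows through there.
function afterViewerClipPath(fraction: number): string {
  const pct = (fraction * 100).toFixed(2);
  return `inset(0 0 0 ${pct}%)`;
}

// Apply the mask to the container that holds the "after" viewer.
function applySplit(afterContainer: Clippable, fraction: number): void {
  afterContainer.style.clipPath = afterViewerClipPath(fraction);
}
```

In a browser, one would call `applySplit` from a `pointermove` handler on the slider handle, taking the container's left edge and width from `getBoundingClientRect()`. Keeping the math in pure functions makes the behavior easy to check without a DOM.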

The AI executed this new, more complex logic perfectly. The result was exhilarating. Within that initial 30-minute break, I had a working prototype of the split viewer that was custom-built to my exact specifications.

Step 3: From Prototype to a Multi-Faceted Product

That initial success was the proof I needed. Over the next couple of days, I dedicated another hour to refining the code and a final hour on Sunday to expanding its capabilities and polishing the user experience, bringing the total to 2.5 hours of focused work.

The final component now offers three distinct ways to compare the two panoramic images:

Split View: The original concept with the custom-built, draggable vertical slider.

Single View Toggle: Simple buttons that allow the user to instantly switch between the full "before" and "after" panoramas. This is perfect for quick, direct comparisons without any visual clutter.

Transition Slider: An interactive slider that controls the opacity of the top ("after") image. This allows the user to smoothly fade between the two states, making it incredibly easy to spot subtle changes and additions across the entire scene.
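
One way to picture how the three modes can share the same pair of layered viewers is to treat each mode as a different style applied to the top ("after") layer. The sketch below is an assumption about how this could be structured, not the component's actual code; the `CompareMode` type, the `TopLayerStyle` interface, and the `topLayerStyle` function are all hypothetical names.

```typescript
// Hypothetical model of the three comparison modes; not the article's code.
type CompareMode =
  | { kind: "split"; fraction: number }             // handle position, 0..1
  | { kind: "single"; show: "before" | "after" }    // toggle buttons
  | { kind: "transition"; opacity: number };        // fade slider, 0..1

interface TopLayerStyle {
  clipPath: string;
  opacity: string;
  visibility: "visible" | "hidden";
}

// Compute the CSS values for the top ("after") layer in each mode.
// The bottom ("before") layer is assumed to stay fully visible throughout.
function topLayerStyle(mode: CompareMode): TopLayerStyle {
  const clamp01 = (v: number): number => Math.min(1, Math.max(0, v));
  switch (mode.kind) {
    case "split":
      // Clip the left part of the top layer up to the handle.
      return {
        clipPath: `inset(0 0 0 ${clamp01(mode.fraction) * 100}%)`,
        opacity: "1",
        visibility: "visible",
      };
    case "single":
      // Hide the top layer entirely to show the "before" panorama.
      return {
        clipPath: "none",
        opacity: "1",
        visibility: mode.show === "after" ? "visible" : "hidden",
      };
    case "transition":
      // Fade the top layer in or out over the bottom one.
      return {
        clipPath: "none",
        opacity: String(clamp01(mode.opacity)),
        visibility: "visible",
      };
  }
}
```

With this shape, switching modes only changes three CSS properties on one element, which is one plausible reason a component like this can stay lightweight.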

To complete the package, I also implemented full-screen capability, ensured the interface was clean and responsive for mobile use, and added intuitive gesture support like pinch-to-zoom and drag-to-pan.

The Procorvus Advantage and the Future

At Procorvus, we provide a comprehensive platform for managing complex construction projects. Our mission is to foster absolute transparency and accountability between architects, contractors, and clients. A slow, clunky viewer isn't just an annoyance; it's a bottleneck to the effective communication that our brand is built on.

This new component, born from a 2.5-hour AI-assisted sprint, is a perfect embodiment of our platform's ethos: powerful, yet incredibly simple and efficient. Integration into the Procorvus application is the one remaining step, and we anticipate it will be swift and straightforward thanks to the component's self-contained, lightweight design.

This entire experience was a powerful validation of the vibe coding workflow. It allowed me to act as a product architect, translating a clear vision into a high-quality, multi-feature product without getting bogged down in implementation minutiae. It proves that you no longer need to be a full-time, heads-down coder to build elegant solutions to complex problems.

It leads me to a final question for every product leader and engineer reading this: as you look at your own SaaS products, how much of your tech debt is just waiting for a fresh approach? And are you considering how "vibe coding" with AI assistants could be your secret weapon to finally tackle it and rapidly build the elegant, lightweight solutions your users truly deserve?